Sleep easier in 2026: see how AI wealth agents automate saving, investing, and risk checks, plus safeguards that keep you in control.

As of December 2025, personal finance is changing fast. Many people feel drained by endless money tasks, markets still feel tense, and everyday costs remain heavy. At the same time, AI is moving from hype to practical action.

That shift is the real story behind financial autopilot. A modern AI agent can monitor your finances, spot patterns, and suggest smart moves. In some products, it can also run a controlled sequence of steps on your behalf. That sounds like a breakthrough. It can also feel unsettling.

So this guide treats financial autopilot as a serious tool, not a toy. It explains what AI agents really do, where the clearest benefits are, and which risks need managing right away. Finally, it gives you a practical framework for 2026, so you can enjoy the upside while you sleep without losing the steering wheel.

What “financial autopilot” really means in 2026

Financial autopilot is not one app. It is a connected system of habits, rules, and automation.

At a basic level, autopilot means your finances run on default rules. Money moves without daily effort: bills get paid, savings accumulate, and investing happens on schedule. The 2026 version goes further because it is agent-driven. An AI agent can coordinate tasks across several tools instead of running one isolated rule.

Instead of one rule, you get a workflow. The agent detects income and bills, forecasts cash needs, allocates money to goals, executes approved transfers or investments, and then monitors risk and alerts you. The best systems also explain their decisions in plain language. That clarity is essential, because a black box is hard to trust.

The key difference: an agent acts, not just advises

Many apps can show charts and send alerts. Far fewer can plan a sequence, then carry it out safely.

A true agentic setup usually does three things. First, the agent observes by reading data. Next, it decides using your goals and guardrails. Finally, it acts, using tools to execute steps. That final step creates the most value. It also creates the most serious risk, so it must be tightly controlled.
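To make the observe, decide, act split concrete, here is a minimal Python sketch. Every name in it (`observe`, `decide`, `act`, `Proposal`, the balances and limits) is hypothetical, and the "act" step only sorts proposals rather than moving real money. The point is structural: deciding produces proposals, and a hard rule in the act step decides what runs and what waits for you.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    action: str   # what the agent wants to do, e.g. "transfer"
    amount: float
    reason: str   # plain-language explanation shown to the user


def observe(balances: dict[str, float]) -> dict[str, float]:
    """Observe: take a read-only snapshot of the data (here, account balances)."""
    return dict(balances)


def decide(snapshot: dict[str, float], buffer_target: float) -> list[Proposal]:
    """Decide: turn goals into proposals. Nothing moves at this stage."""
    excess = snapshot["checking"] - buffer_target
    if excess > 0:
        return [Proposal("transfer", round(excess, 2), "checking is above the buffer target")]
    return []


def act(proposals: list[Proposal], max_per_action: float) -> tuple[list[Proposal], list[Proposal]]:
    """Act: a hard limit, not the language model, decides what may run.
    Returns (executed, held_for_approval); execution is only simulated here."""
    executed, held = [], []
    for p in proposals:
        if p.amount <= max_per_action:
            executed.append(p)
        else:
            held.append(p)
    return executed, held


snapshot = observe({"checking": 5200.0, "savings": 10000.0})
proposals = decide(snapshot, buffer_target=4000.0)
executed, held = act(proposals, max_per_action=500.0)
print(executed, held)  # the 1200.0 transfer exceeds the cap, so it is held
```

Keeping the limit check in ordinary code, outside whatever generates the proposal, is one way to separate language from execution.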

Autopilot is a spectrum, not a switch

Not everyone should use full automation. A smarter approach is to pick a level that passes your sleep test.

Low automation focuses on alerts, reminders, and simple summaries. Medium automation adds rule-based transfers, automatic investing, and scheduled bill pay. High automation can add goal-based rebalancing and cash optimization across accounts.

Furthermore, you can mix levels. You can automate savings but keep investing manual. You can automate bills but require approval for transfers. This flexible approach is practical, calming, and safer.

Why this shift is happening now

The rise of autopilot is not just about better software. It is also about emotion, incentives, and real world pressure.

People want relief from money stress

Money tasks are relentless. Tracking spending is exhausting, and decision fatigue is real. Many people feel anxious or guilty when they put these tasks off.

Autopilot can reduce that pain. It turns financial care into a routine you can sustain. That routine can feel stabilizing, empowering, and even hopeful. When it works well, it feels like a quiet, rewarding win every week.

Higher yields changed the value of idle cash

When yields rise, cash placement matters more. A small change in where cash sits can have a visible impact over months.

Consequently, “cash intelligence” became a hot feature. Many platforms now focus on optimizing buffers: they aim to keep enough cash for safety while moving the rest toward goals. This is not flashy, but it can add up meaningfully over time, even though nothing is guaranteed.

AI tools became easier to connect and orchestrate

The modern finance stack is full of APIs. Banks, brokerages, payroll, and budgeting tools can connect.

Meanwhile, AI got better at reading messy data. It can classify transactions, summarize account activity, and draft a plan you understand. So the agent layer can sit on top of your existing financial life without replacing everything at once. That pace of change is exciting, disruptive, and, at times, overwhelming.

How AI wealth agents work behind the scenes

You do not need to code. You need a clear mental model and strong guardrails.

Data layer: what the agent can see

An agent can only act on what it can read. Typical inputs include transactions and balances, bills and subscriptions, payroll deposits, investment holdings, and goals you set.

However, more data is not always better. The safest products request only what is essential. Less access can be a powerful safety feature and a reassuring sign of mature design.

Rules layer: your goals become guardrails

A good agent is not free to do anything. It obeys rules you set.

These guardrails should cover a minimum cash buffer, a maximum transfer per day, limits on trading behavior, and approvals for large actions. Additionally, a safer design includes a big, obvious pause button. That pause must be instant and easy. It should never be hidden.
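The guardrails listed above can be expressed as a small rule check that runs before any money moves. This is a minimal sketch under assumed names (`Guardrails`, `check_transfer`) and example numbers, not any product's real configuration format. Note the ordering: the pause button and hard limits block outright, and only actions that pass them can be routed to you for approval.

```python
from dataclasses import dataclass


@dataclass
class Guardrails:
    min_cash_buffer: float        # checking must never fall below this
    max_transfer_per_day: float   # cap on total automated outflow per day
    approval_threshold: float     # single actions above this need a human
    paused: bool = False          # the big, obvious pause button


def check_transfer(g: Guardrails, amount: float, balance: float, moved_today: float) -> str:
    """Return 'allow', 'needs_approval', or 'block' for a proposed outgoing transfer."""
    if g.paused:
        return "block"                      # pause overrides everything
    if balance - amount < g.min_cash_buffer:
        return "block"                      # would break the cash buffer
    if moved_today + amount > g.max_transfer_per_day:
        return "block"                      # would exceed the daily cap
    if amount > g.approval_threshold:
        return "needs_approval"             # large action: ask the user
    return "allow"


g = Guardrails(min_cash_buffer=2000.0, max_transfer_per_day=1000.0, approval_threshold=300.0)
print(check_transfer(g, 200.0, balance=5000.0, moved_today=0.0))  # allow
print(check_transfer(g, 400.0, balance=5000.0, moved_today=0.0))  # needs_approval
print(check_transfer(g, 400.0, balance=2200.0, moved_today=0.0))  # block (buffer)
```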

Action layer: how money actually moves

When an agent acts, it typically uses standard rails like ACH transfers, broker orders, and bill pay. The difference is timing and coordination. The agent can choose when to run the workflow and adapt when something changes, like a new bill or an income shift.

Consequently, action logs are critical. Every serious system should show what it did, when it did it, why it did it, and what data triggered it. A system without clear logs is a dangerous system.
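A log entry that answers what, when, why, and which data triggered the action can be very simple. The sketch below uses hypothetical names (`ActionLogEntry`, `log_action`) and an in-memory list; a real system would persist entries somewhere tamper-resistant. The design choice worth copying is append-only: entries are frozen and never edited after the fact.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: an entry cannot be changed once written
class ActionLogEntry:
    action: str      # what it did
    amount: float
    timestamp: str   # when it did it (ISO 8601, UTC)
    reason: str      # why, in plain language
    trigger: str     # which data triggered it


def log_action(log: list, action: str, amount: float, reason: str, trigger: str) -> ActionLogEntry:
    entry = ActionLogEntry(action, amount, datetime.now(timezone.utc).isoformat(), reason, trigger)
    log.append(entry)  # append only; nothing is ever removed or rewritten
    return entry


log: list[ActionLogEntry] = []
log_action(log, "transfer checking->savings", 250.0,
           "Checking balance above buffer target", "payroll deposit detected")
print(json.dumps([asdict(e) for e in log], indent=2))
```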

The proven use cases that matter most in 2026

Not all automation is equal. Some uses are high value and low drama. Others are high risk and high regret.

Cash flow autopilot: the most immediate win

Cash flow is where many households struggle. Autopilot can feel like a lifesaver here.

A strong cash flow agent can forecast upcoming bills, warn about low balances, move cash from a buffer account, and prevent overdrafts. Furthermore, it can reduce late fees and reduce stress. Those are immediate, practical, and deeply motivating benefits.
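The forecasting idea behind overdraft prevention is plain arithmetic: roll scheduled income and bills forward day by day and look for the lowest point. The sketch below assumes hypothetical function names and a simplified input (a map from day offset to net amount); real products also estimate irregular spending, which this ignores.

```python
from typing import Optional


def forecast(balance: float, scheduled: dict[int, float], horizon_days: int) -> list[float]:
    """Project end-of-day balances.
    scheduled maps a day offset (0 = today) to a net amount: + for income, - for bills."""
    projected = []
    for day in range(horizon_days):
        balance += scheduled.get(day, 0.0)
        projected.append(round(balance, 2))
    return projected


def first_low_day(projected: list[float], floor: float) -> Optional[int]:
    """Return the first day offset where the balance dips below floor, or None."""
    for day, bal in enumerate(projected):
        if bal < floor:
            return day
    return None


def top_up_needed(projected: list[float], floor: float) -> float:
    """Amount to move from a buffer account today so no projected day dips below floor."""
    return round(max(0.0, floor - min(projected)), 2)


# Hypothetical example: rent on day 2, a card bill on day 5, payroll on day 10.
projected = forecast(1200.0, {2: -950.0, 5: -300.0, 10: 2400.0}, horizon_days=14)
print(first_low_day(projected, floor=100.0))  # 5
print(top_up_needed(projected, floor=100.0))  # 150.0
```

The warning fires days before the problem, which is what gives the agent (or you) time to move cash from a buffer account.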

Goal based saving: the quiet compounding machine

Saving is often a habit problem, not a knowledge problem. A good agent makes saving automatic.

It can split income into goal buckets, increase saving when income rises, and ease off when bills spike, so you avoid overdrafts. This is not glamorous. Yet it can be powerful, consistent, and confidence building.
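One way to get all three behaviors (split into buckets, save more as income rises, ease off when bills spike) is to save a fixed share of income but never more than what is left after obligations. This is a minimal sketch; the 15% default rate, the bucket names, and the function `allocate` are assumptions for illustration, not a recommendation.

```python
def allocate(income: float, upcoming_bills: float, essentials: float,
             goals: dict[str, float], base_rate: float = 0.15) -> dict[str, float]:
    """Split one paycheck into goal buckets.
    goals maps bucket name -> weight (weights should sum to 1).
    Saving scales with income but never exceeds the room left after obligations."""
    room = income - upcoming_bills - essentials           # what remains after obligations
    to_save = max(0.0, min(base_rate * income, room))     # ease off when room shrinks
    return {name: round(to_save * weight, 2) for name, weight in goals.items()}


goals = {"emergency": 0.5, "house": 0.3, "travel": 0.2}
print(allocate(4000.0, 1500.0, 1200.0, goals))  # {'emergency': 300.0, 'house': 180.0, 'travel': 120.0}
print(allocate(4000.0, 2500.0, 1200.0, goals))  # bills spiked: only 300 saved in total
```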

Hands off investing: disciplined, not dramatic

Investing autopilot is not about predicting the market. It is about staying steady.

In 2026, many platforms emphasize automated diversified investing, scheduled contributions, and periodic rebalancing. Some also propose tax-aware moves for taxable accounts. Those can add value over time, but only when they are transparent, optional, and aligned with your risk level.

Continuous risk monitoring: calmer protection

Humans are bad at monitoring. We miss warnings. We ignore small problems until they become a crisis.

Agents can do constant monitoring. They can flag unusual spending, detect suspicious transfers, and alert on new devices or logins. That feels reassuring. It is also a vital safety layer in an era of aggressive fraud.
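Flagging unusual spending can start with a simple statistical rule. The sketch below is one assumed approach, not how any particular bank does it: it compares a new charge with your history using the median and median absolute deviation (MAD), which resist distortion from a few past outliers. The threshold `k = 3` and the `min_history` cutoff are illustrative choices.

```python
import statistics


def is_unusual(amount: float, history: list[float], k: float = 3.0, min_history: int = 10) -> bool:
    """Flag a charge that sits far above what you usually spend in this category.
    Measures distance from the median in units of median absolute deviation (MAD)."""
    if len(history) < min_history:
        return False  # not enough history; a real system might fall back to fixed limits
    median = statistics.median(history)
    mad = statistics.median([abs(x - median) for x in history]) or 1.0  # avoid dividing by zero
    return (amount - median) / mad > k


groceries = [62, 55, 71, 48, 66, 59, 80, 52, 64, 58]
print(is_unusual(75, groceries))   # False: a bit high, but within the usual range
print(is_unusual(420, groceries))  # True: far outside the usual range
```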

Where banks and wealth firms are going in 2026

Consumer apps are only one side. The other side is what banks and wealth firms are quietly building.

Banks are piloting agentic experiences

In 2026, banks want to be your primary financial home. So they are moving from static apps toward assistant features.

Expect budgeting assistants, automated saving nudges, and account monitoring agents. However, regulators are cautious. Autonomy increases risk, and so does speed. Governance becomes essential and non-negotiable.

Wealth management is becoming a hybrid model

A full robo service can be cheap. A human advisor can be expensive. Many firms are aiming for a hybrid.

The hybrid model is simple. AI handles routine workflows. Humans handle complex planning and emotional coaching during market stress. Additionally, AI can draft meeting notes and highlight life event triggers, which can make an advisory team feel stronger and more responsive.

Consequently, in 2026 you may see “AI powered advisor” become a standard label. Still, labels are not proof. Controls and accountability are what make a product trustworthy.

The critical risks you cannot ignore

This is where a sober mindset matters. Benefits do not cancel risks.

Confident errors and hallucinations

Modern AI can sound certain. It can still be wrong.

If an agent only summarizes, errors are annoying. If it executes trades, errors can be expensive. Therefore, action must be constrained. A safe system separates language from execution, uses hard rules for actions, and requires approvals for sensitive steps.

Conflicts of interest can hide inside recommendations

A platform might benefit when you trade more. It might benefit when you pick a higher fee product. It might benefit when you borrow. That incentive problem is not new. AI can amplify it by making nudges feel personal and comforting.

Consequently, ask direct questions. How does the platform earn money? Does it receive commissions or revenue sharing? Does it steer users toward in-house products? Are those incentives disclosed clearly? Transparency is a trust feature. Without it, you cannot verify whose interests a recommendation serves.

Privacy and cybersecurity risks

Autopilot needs access. Access creates exposure. A breach can leak identity and enable theft.

So security is essential. Look for strong multi-factor authentication, device verification, transfer limits, instant alerts, and easy revocation of access. Additionally, prefer systems that minimize data collection. Data minimization is a powerful defense.

Herding and systemic fragility

If many agents follow similar rules, they can move together. That can amplify volatility. It can also create crowded behaviors.

Even if you are a small user, ecosystem effects matter. Consequently, serious firms test stress scenarios, simulate outages, and monitor concentration risk in their vendor stack. This is boring work. It is also critical work.

Regulation and oversight: what changes for 2026

Rules shape your protections. In 2026, oversight is evolving and becoming more direct.

The EU AI Act timeline pressures global firms

Europe is rolling out a phased approach. Some obligations started applying in 2025. More obligations arrive in 2026.

That matters because global platforms prefer one governance model. They often build to the strictest rule set. So EU timelines can influence product design worldwide, even for non-European customers.

US oversight remains fragmented

In the US, oversight involves multiple bodies across securities, banking, and consumer protection. This can create uncertainty. It can also create gaps.

Therefore, your own guardrails remain vital. Consumer caution is still essential, even when the marketing looks polished and confident.

The practical takeaway

Regulation is a tailwind for safer design. It is not a guarantee of safety.

In 2026, the firms that stand out will invest in governance. They will show their controls, keep clear logs, and accept accountability. Over time, that discipline builds trust.

How to choose a financial autopilot in 2026

You can evaluate any platform with a simple checklist. This is practical, not technical.

Start with permissions and limits

Ask what the system can do, then set limits.

A safer first setup starts with read-only access. When you do enable actions, require approvals for transfers and trades, set daily caps on automated moves, and keep a visible pause button. This approach is cautious, and it protects you while the system is still earning your trust.

Demand an audit trail

A serious product should show a history feed that lists the action, time, trigger, amount, and reason.

If the platform cannot provide this, it is not ready for trust. That is a critical red flag.

Look for “shadow mode” or simulation

Some tools let the agent propose actions without executing them. That feature is extremely valuable.

Use it for 30 days. Compare suggestions with your own judgment. Watch how it handles edge cases, like irregular income or a surprise bill. Furthermore, test how it responds when you override it. A safe system should adapt without punishing you.
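Scoring a shadow-mode trial can be as simple as lining up what the agent would have done against what you actually did. This sketch assumes a hypothetical format where each decision has an id (such as a date plus event) and an action label; the function name `shadow_report` is illustrative. Nothing is executed; the output is an agreement rate plus the list of disagreements worth reviewing by hand.

```python
def shadow_report(proposals: dict[str, str], user_choices: dict[str, str]) -> tuple[float, list[str]]:
    """Compare what the agent *would* have done with what you actually did.
    Both dicts map a decision id to an action label. Returns (agreement_rate, disagreements)."""
    shared = sorted(proposals.keys() & user_choices.keys())  # decisions both sides made
    disagreements = [k for k in shared if proposals[k] != user_choices[k]]
    rate = 1 - len(disagreements) / len(shared) if shared else 0.0
    return rate, disagreements


agent = {"d1": "save 200", "d2": "pay card", "d3": "save 300", "d4": "hold"}
you = {"d1": "save 200", "d2": "pay card", "d3": "hold", "d4": "hold"}
print(shadow_report(agent, you))  # (0.75, ['d3'])
```

The disagreements list matters more than the rate: one bad call on a surprise bill can outweigh many routine agreements.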

Check disclosures and conflicts

Read disclosures, even if it feels tedious.

Look for fees and spreads, revenue sharing, product steering, and data sharing with partners. A trustworthy platform will be clear about all of these. It will not hide behind vague language.

A safer 2026 setup: step by step

This playbook is designed to protect you while you gain the biggest benefits.

Step 1: Define your minimum safety buffer

Pick a buffer level that lets you breathe. Many people start with one month of expenses. Some prefer three months.

Your buffer is your sleep insurance. It prevents panic when surprises hit. That peace is priceless.
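The buffer rule translates directly into the "cash intelligence" arithmetic described earlier: keep N months of expenses, and treat only the excess as available for goals. This is a minimal sketch with hypothetical names; the `min_sweep` floor is an assumed detail that skips tiny transfers.

```python
def buffer_target(monthly_expenses: float, months: float) -> float:
    """Cash to keep on hand, e.g. one or three months of expenses."""
    return monthly_expenses * months


def sweep_amount(cash_balance: float, monthly_expenses: float,
                 months: float = 1.0, min_sweep: float = 50.0) -> float:
    """Cash above the buffer that could move toward goals.
    Amounts below min_sweep are skipped to avoid churning tiny transfers."""
    excess = cash_balance - buffer_target(monthly_expenses, months)
    return round(excess, 2) if excess >= min_sweep else 0.0


print(sweep_amount(4300.0, 3200.0))            # 1100.0 with a one-month buffer
print(sweep_amount(4300.0, 3200.0, months=3))  # 0.0: still building a three-month buffer
```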

Step 2: Automate the boring flows first

Start with bill pay, savings transfers, and emergency fund building. Avoid complex trading at first. Build trust slowly. Slow and steady can be surprisingly successful.

Step 3: Use autopilot for diversified investing only

If you invest, keep it simple. Use broad index exposure, regular contributions, and rebalancing with clear thresholds.
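"Rebalancing with clear thresholds" usually means doing nothing until an asset drifts outside a band around its target weight. The sketch below assumes a hypothetical `rebalance_orders` function, a 5-percentage-point band, and ignores taxes, fees, and minimum trade sizes. When it does act, buys and sells net to zero.

```python
def rebalance_orders(holdings: dict[str, float], targets: dict[str, float],
                     band: float = 0.05) -> dict[str, float]:
    """Return buy (+) / sell (-) amounts that restore target weights,
    but only if some asset drifted more than `band` (absolute weight) from its target."""
    total = sum(holdings.values())
    weights = {k: v / total for k, v in holdings.items()}
    drifted = any(abs(weights.get(k, 0.0) - w) > band for k, w in targets.items())
    if not drifted:
        return {}  # inside the band: leave the portfolio alone
    return {k: round(w * total - holdings.get(k, 0.0), 2) for k, w in targets.items()}


targets = {"stocks": 0.6, "bonds": 0.4}
print(rebalance_orders({"stocks": 7200.0, "bonds": 2800.0}, targets))  # {'stocks': -1200.0, 'bonds': 1200.0}
print(rebalance_orders({"stocks": 6200.0, "bonds": 3800.0}, targets))  # {}
```

A band avoids constant small trades; a pure calendar schedule is the common alternative.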

Avoid anything that promises dramatic gains. That language is often a trap. Consistency is more reliable than hype.

Step 4: Set alerts for anything that matters

Alerts are not noise when they are configured well.

Set alerts for any transfer above a threshold, any new device login, any withdrawal from a brokerage, and any bill that jumps unusually. Additionally, require confirmations for sensitive actions. This is vital.
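The four alert rules above can be written as explicit checks on incoming events. This sketch uses a hypothetical `Event` shape and assumed defaults (a 500 transfer threshold, a 25% bill jump); real platforms expose these as settings rather than code, but the logic is the same.

```python
from dataclasses import dataclass


@dataclass
class Event:
    kind: str                     # "transfer", "login", "brokerage_withdrawal", or "bill"
    amount: float = 0.0
    device_id: str = ""
    previous_amount: float = 0.0  # last bill amount, for the "bill jumped" rule


def alerts_for(event: Event, known_devices: set[str],
               transfer_threshold: float = 500.0, bill_jump: float = 0.25) -> list[str]:
    alerts = []
    if event.kind == "transfer" and event.amount > transfer_threshold:
        alerts.append(f"Transfer of {event.amount:.2f} exceeds {transfer_threshold:.2f}")
    elif event.kind == "login" and event.device_id not in known_devices:
        alerts.append(f"New device login: {event.device_id}")
    elif event.kind == "brokerage_withdrawal":
        alerts.append(f"Brokerage withdrawal of {event.amount:.2f}")  # always alert
    elif (event.kind == "bill" and event.previous_amount > 0
          and event.amount > event.previous_amount * (1 + bill_jump)):
        alerts.append(f"Bill jumped from {event.previous_amount:.2f} to {event.amount:.2f}")
    return alerts


print(alerts_for(Event("bill", amount=140.0, previous_amount=100.0), {"phone-1"}))
```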

Step 5: Review monthly, not daily

Autopilot reduces daily effort. It should not eliminate oversight.

Review once per month. Check cash buffer health, spending categories, investment allocations, and agent action logs. A monthly rhythm is calm and sustainable.

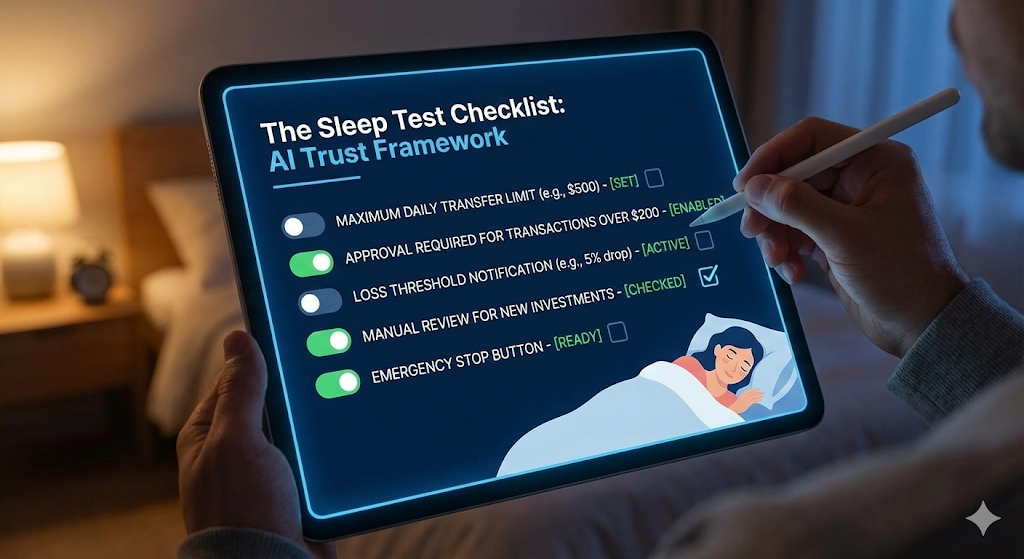

The “sleep test”: a framework for trust

Here is a simple question. It is also brutally honest.

Imagine waking up tomorrow to find that the agent made a mistake. Ask what the worst-case loss could be. If that loss is small, you can sleep. If it is large, change your settings.

Set a maximum loss you can accept

Pick a number that would hurt, but not break you. Then configure limits so the agent cannot exceed it in a single night. This is one of the most powerful controls you can set, and it takes effect immediately.
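The sleep test can be checked with simple arithmetic: add up the per-night caps on every automated channel and compare the total with your maximum acceptable loss. This sketch assumes hypothetical channel names and caps. It is deliberately conservative, treating every automated move as lost, even though a mistaken transfer between your own accounts is often recoverable.

```python
def worst_case_overnight(nightly_caps: dict[str, float]) -> float:
    """Conservative bound: assume every automated channel hits its cap
    in the same night and none of it can be recovered."""
    return sum(nightly_caps.values())


def passes_sleep_test(nightly_caps: dict[str, float], max_acceptable_loss: float) -> bool:
    return worst_case_overnight(nightly_caps) <= max_acceptable_loss


caps = {"transfers": 500.0, "bill_pay": 300.0, "trades": 1000.0}
print(worst_case_overnight(caps), passes_sleep_test(caps, 1000.0))  # 1800.0 False
caps["trades"] = 200.0  # tighten the riskiest channel
print(worst_case_overnight(caps), passes_sleep_test(caps, 1000.0))  # 1000.0 True
```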

Make reversibility a design requirement

A safer action is reversible. A risky action is irreversible.

Moving money between your own accounts is often reversible. Pausing subscriptions is often reversible. Selling a large position is often less reversible. So require approval for irreversible actions. That rule is simple, protective, and essential.
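The reversibility rule fits in a lookup table. In this sketch the action names and the table itself are assumptions for illustration, based on the examples above. The key design choice is failing closed: any action the table does not know about is treated as irreversible and needs your approval.

```python
# Hypothetical classification of agent actions by reversibility.
REVERSIBLE = {
    "transfer_between_own_accounts": True,
    "pause_subscription": True,
    "sell_position": False,
}


def requires_approval(action: str) -> bool:
    """Irreversible or unknown actions need a human; unknown actions fail closed."""
    return not REVERSIBLE.get(action, False)


for a in ["transfer_between_own_accounts", "sell_position", "close_account"]:
    print(a, requires_approval(a))
```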


What to expect next: the 2026 trends that matter

Predictions are never guaranteed. Still, several trends look strong and urgent.

More agents will be embedded inside trusted platforms

Many users will not adopt brand new startups. They will adopt features inside banks and brokerages they already use.

So expect autopilot toggles inside banking apps, AI driven budgeting inside card apps, and agent features inside wealth portals. Additionally, expect tighter permission systems. Trust requires controls. That is the direction.

Governance will become a competitive advantage

In 2026, firms will compete on trust.

Expect clearer logs, better explanations, stricter constraints, and more direct accountability. Meanwhile, regulators will keep pushing for safe design. The old “move fast and break things” playbook is not compatible with consumer finance.

Personalization will rise, so user control must rise too

Personalization can be helpful. It can also be manipulative.

A friendly agent that knows your habits can push you. It can also exploit you if incentives are misaligned. Therefore, “why am I seeing this” explanations will become crucial. Consent controls will matter more. Data sharing settings will become a core feature, not an afterthought.

Signals and statistics from 2024 to 2025 that shape 2026

This section is near the end on purpose. It collects recent signals that inform the 2026 outlook.

Wealth and asset managers are exploring agentic AI

A 2025 EY survey reported that 78% of surveyed wealth and asset management firms were actively identifying agentic AI opportunities. That points to strong momentum moving into 2026. (EY)

Banks are running customer-facing trials for early 2026

In December 2025, Reuters reported that British banks were working with the FCA on customer-facing trials of agentic AI expected to launch in early 2026, while the FCA highlighted new governance and stability risks from autonomy and speed. (Reuters)

Global financial stability bodies are flagging AI vulnerabilities

The Financial Stability Board has emphasized that AI adoption can create vulnerabilities, including third-party dependencies, cyber risk, and governance challenges that can matter for financial stability. (Financial Stability Board)

Scaling is still hard, even as AI use spreads

McKinsey’s 2025 survey reported that 88% of respondents said their organizations use AI in at least one business function, while the same survey noted that most organizations still have not scaled AI. That gap matters for 2026 because consumer products can move faster than institutional controls. (McKinsey & Company)

The EU AI Act timeline increases pressure in 2026

The European Commission’s implementation timeline shows phased milestones already applying in 2025 and further applicability arriving in 2026, which encourages stricter governance for higher impact AI uses. (AI Act Service Desk)

Conclusion: Autopilot can be powerful, if you stay the pilot

Financial autopilot in 2026 can be a breakthrough for busy people. Autopilot can reduce stress. It can keep bills paid. Savings can grow quietly. Disciplined investing can stay on track.

However, autonomy creates risk. Confident errors are real. Incentives can be misaligned. Cyber threats are constant. Governance is essential.

So aim for a calm, verified setup. Start with low risk automation. Use strict limits. Require approvals for sensitive actions. Demand logs and transparency. Review monthly, not obsessively.

If you do that, you can enjoy the rewarding benefits of AI assistance while you sleep, without giving up control of your future.

Sources and References

- GenAI in Wealth & Asset Management Survey 2025 (EY)

- Agentic AI race by British banks raises new risks for regulator (Reuters, Dec 2025)

- Monitoring Adoption of AI and Related Vulnerabilities in the Financial Sector (FSB, Oct 2025)

- The Financial Stability Implications of Artificial Intelligence (FSB, Nov 2024)

- AI Act: Regulatory framework and application timeline (European Commission)

- EU AI Act Implementation Timeline (European Commission service desk)

- The State of AI: Global Survey 2025 (McKinsey)

- Global Financial Stability Report, Oct 2024 (IMF)

- Conflicts of Interest Associated with Predictive Data Analytics, Withdrawal (SEC, Jun 2025)

- AI Applications in the Securities Industry (FINRA)