In 2026, boards face AI hallucination claims. See how D&O insurance is changing, where exclusions hide, and which urgent governance steps cut liability.

Why this risk feels “new” in 2026

AI moved from “pilot project” to “core product” fast. That shift is exciting. It is also risky. In 2026, more companies will let AI speak directly to customers, patients, and investors. That single decision changes the legal temperature of a business.

Here is the uncomfortable truth. An AI system can say something wrong with total confidence. It can do it at scale. It can do it repeatedly. That combination is exactly what plaintiff lawyers love. It is also what underwriters fear.

Directors and officers (D&O) insurance was built for classic corporate threats. Think securities suits, derivative actions, and regulatory probes. Now insurers see a fresh frontier. The board is being asked a blunt question: did you govern the AI, or did you “plug it in” and hope? Allianz’s 2026 D&O report flags AI as a growing source of D&O exposure and points to a notable rise in AI-related filings and “AI washing” allegations. (Allianz Commercial)

An “AI hallucination” is not just a tech issue. It is a governance issue. It is a disclosure issue. It is an internal controls issue. Consequently, it is becoming a D&O issue.

What counts as “AI hallucination” in the real world

A hallucination is not a funny glitch. It is an output that sounds credible but is wrong, invented, or unsupported. That can look harmless in a chatbot that recommends restaurants. It looks catastrophic in a bot that gives medical, pricing, lending, or compliance guidance.

The key legal twist is simple. Courts and regulators are unlikely to excuse an error because the AI is probabilistic. They care that the company chose to deploy it. They also care about what the company promised it could do.

[📷 Image: Simple diagram of how an LLM can generate confident but incorrect output, and where human review can intercept it]

Additionally, many firms now market AI as “trustworthy,” “safe,” or “verified.” That language can become Exhibit A later. If the AI then outputs harmful errors, plaintiffs can argue the board failed to oversee a known risk, or misled the market about controls.

Why boards get pulled in, not just engineers

Engineers build. Boards approve direction and risk appetite. If management rolled out an advice-giving AI without proper guardrails, the board can be accused of weak oversight. That theme is already visible in AI-related filings, including claims about overstating benefits or understating risks. (Allianz Commercial)

Furthermore, AI failure often triggers a chain reaction: customer harm, regulator interest, media attention, stock drop, then litigation. D&O claims live inside that chain reaction.

The new liability map: from “bad bot” to boardroom

To understand 2026 exposure, picture a simple path.

First, the AI makes a wrong statement. Next, a user relies on it. Then, harm happens or alleged harm happens. After that, someone asks: who approved this system, who monitored it, and what did you disclose?

The most common 2026 claim patterns

One pattern is consumer harm, followed by allegations that the company did not test or monitor the system. Another pattern is investor harm, driven by stock drops after AI incidents or after revelations that AI capabilities were exaggerated.

Meanwhile, regulators are also shaping the risk. The U.S. SEC has already brought enforcement actions tied to misleading AI statements, including “AI washing.” (YouTube) That matters for D&O because securities and disclosure themes are classic D&O territory.

A third pattern is professional harm. If the AI provides medical or financial suggestions, plaintiffs can argue negligence, unfair practices, or product-liability-style theories. The board may not be sued first. But once the story becomes “leadership ignored obvious risk,” D&O is in play.

What “uncorrelated” risk really means for D&O

People sometimes claim AI risk is “uncorrelated.” That is not true. It correlates with hype cycles, product launches, and cost cutting.

In 2026, firms will be under pressure to automate support, underwriting, triage, and onboarding. That pressure is intense. It can create a fragile decision: speed over controls. Consequently, the most “innovative” firms can also become the most exposed.

D&O insurance basics, explained for this new moment

D&O coverage is a contract with sharp edges. It is not a blanket guarantee. It is a set of promises with definitions, exclusions, and conditions.

The three classic “Sides” and why they matter in AI events

Side A generally responds when the company cannot indemnify individuals. Think insolvency, or a legal bar on indemnification.

Side B typically reimburses the company when it indemnifies directors and officers.

Side C often covers the entity itself for certain claims, commonly securities claims for public companies.

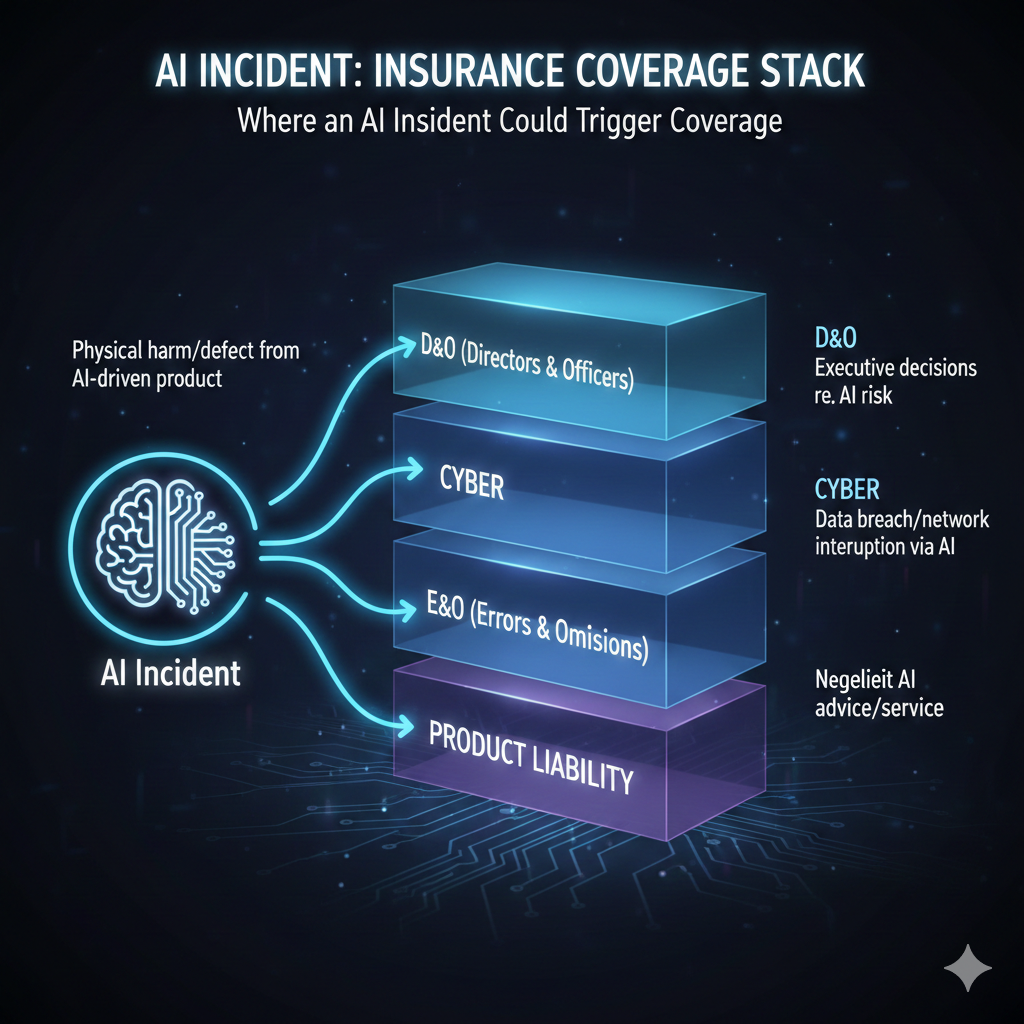

In an AI hallucination scenario, the first fight is often: is it a covered “wrongful act” by insured persons, or is it a product failure? That distinction shapes whether the claim belongs in D&O, E&O, cyber, or product liability coverage.

[YouTube Video]: Clear 2025 explainer of D&O insurance structure and why “Side A/B/C” matters when a crisis hits.

The “claims made” trap that surprises founders

Most D&O policies are claims made. That means timing matters. Notice requirements matter. Wording matters.

Additionally, “interrelated claims” language can bundle separate complaints into one claim. That can be good or bad. It can drain a single limit fast. Or it can mean only one retention applies and keep related disputes in one tower.

For AI incidents, timing risk is real. A small complaint today can evolve into a class action later. If the company fails to report early circumstances, coverage fights can follow. That is painful. It is also avoidable with disciplined reporting.
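Disciplined reporting can be partly operational. Below is a minimal Python sketch, assuming incidents are kept in a simple hypothetical `AIIncident` record, that lists AI incidents not yet noticed to the carrier (oldest first) alongside the days left in the policy period. The field names, the `notice_report` helper, and the dates are illustrative assumptions. What actually counts as a reportable circumstance, and the deadline for reporting it, depend on the policy wording.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class AIIncident:
    """A logged AI incident that could later grow into a claim (hypothetical schema)."""
    description: str
    discovered: date
    noticed_to_insurer: Optional[date] = None  # date notice of circumstances was sent, if any


def notice_report(incidents: list[AIIncident], policy_expiry: date, today: date) -> dict:
    """List incidents not yet reported to the insurer, oldest first, with their age in days."""
    pending = sorted(
        (inc for inc in incidents if inc.noticed_to_insurer is None),
        key=lambda inc: inc.discovered,
    )
    return {
        "days_to_policy_expiry": (policy_expiry - today).days,
        "unreported": [(inc.description, (today - inc.discovered).days) for inc in pending],
    }


# Illustrative data only.
log = [
    AIIncident("bot misquoted a fee", date(2026, 3, 1)),
    AIIncident("wrong dosage answer", date(2026, 2, 1)),
    AIIncident("pricing error", date(2026, 1, 10), noticed_to_insurer=date(2026, 1, 12)),
]
print(notice_report(log, policy_expiry=date(2026, 6, 30), today=date(2026, 4, 1)))
```

Reviewing a report like this at each risk committee meeting is one way to keep a small complaint from turning into a late-notice dispute.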

Common exclusions that can swallow AI claims

In 2026, the market trend is moving toward AI-specific endorsements and exclusions in some lines. WTW notes insurers are introducing AI-specific exclusions or endorsements in some markets, and urges early identification of gaps. (WTW)

Even without an AI-specific exclusion, D&O policies often have exclusions that can collide with AI incidents, such as fraud, prior knowledge, bodily injury, and professional services exclusions. The details vary by carrier and negotiation. Consequently, the board needs the broker to explain what the policy actually covers, not just the headline limit.

The 2026 coverage battleground: D&O vs E&O vs Cyber

If you deploy AI that “advises,” you are not only doing software. You are providing a service. That service can look professional. It can look medical. It can look financial.

Where D&O ends and professional liability begins

D&O is designed for management acts. E&O is designed for errors in delivering services or products. An AI chatbot that gives investment allocations can push a claim toward E&O. Yet if investors allege the board misrepresented the controls, that can push it back to D&O.

Additionally, cyber policies focus on security incidents, data breaches, ransomware, and sometimes tech errors. If an AI hallucination is tied to compromised data, prompt injection, or a breach, cyber could be triggered too. That overlapping map is messy. Therefore, 2026 risk management is about coordination, not buying one policy and hoping.

The “bodily injury” issue when AI touches health

If an AI gives wrong medical guidance and someone alleges physical harm, many D&O forms have bodily injury exclusions. That can be a brutal surprise. It can shift the fight to other lines. It can also lead to gaps.

That does not mean “no coverage.” It means you must engineer the insurance program deliberately. In 2026, the broker who understands AI will be priceless.

Underwriting in 2026: what insurers will ask, and why

Carriers are not just pricing balance sheets anymore. They are pricing governance.

Allianz highlights that robust governance and risk management are critical as fast-evolving areas like AI become harder to quantify. (Allianz Commercial) That is a polite way of saying: underwriters want proof.

The new underwriting questions you should expect

Expect questions like: What AI models do you use? Are they third-party or in-house? Is there a human review layer for high-stakes outputs? How do you monitor errors? How do you log prompts and outputs? What do you disclose to users?

Additionally, underwriters will ask about marketing claims. If you promise “accuracy” or “verified advice,” your risk looks hotter. Consequently, the insurance conversation now touches product language, not just finance.

The “AI exclusion” trend and why it is accelerating

Recent market commentary has noted insurers exploring broader AI-related exclusions in liability contexts, driven by fear of mass claims. (Barron’s) Even when exclusions are not absolute, endorsements can narrow coverage for AI-related errors.

WTW similarly points out that AI-specific exclusions or endorsements are emerging in some markets. (WTW)

That trend is a warning. It suggests 2026 is a transition year. The window for negotiating favorable wording may shrink as standardized exclusions spread.

Regulation and policy in 2026: why compliance becomes a board issue

AI regulation is moving from principles to enforcement. That changes D&O risk because failure to comply can become a governance failure.

The EU AI Act timeline that matters for 2026 planning

The European Commission’s guidance on obligations for providers of general-purpose AI models notes that obligations apply from August 2, 2025, and that the Commission’s enforcement powers enter into application from August 2, 2026. (Digital Strategy)

Even if a company is not based in the EU, exposure can arise through customers, partners, or distribution. Additionally, the EU approach can influence global norms. Therefore, boards should treat 2026 as a compliance forcing function.

U.S. enforcement signals: “AI washing” and consumer protection

The SEC has brought cases tied to misleading AI statements. (YouTube) That matters because many D&O claims begin with a stock drop after credibility breaks.

Meanwhile, the FTC has launched actions aimed at AI-related deception and unfair practices, including “Operation AI Comply.” (Lexology) Even if the specific cases are not about hallucinations, the enforcement posture is clear: do not overpromise, and do not deploy recklessly.

Consequently, 2026 will reward conservative language, strong controls, and clean documentation.

The “hallucination evidence” problem: plaintiffs now have receipts

For years, AI errors were anecdotal. In 2026, they are measurable.

Independent audits, watchdog reports, and public benchmarks are giving plaintiff lawyers a powerful tool: foreseeability. If it is widely known that systems can hallucinate, then “we did not know” becomes a weak defense.

[YouTube Video]: Walkthrough of testing that highlights how often AI answers can be wrong, and why that is a serious governance issue for 2026 deployments.

Why “known limitation” changes the duty of care narrative

Once a risk is known, boards are expected to oversee it. That does not mean zero mistakes. It means reasonable process.

Reasonable process in 2026 looks like: risk classification, testing, monitoring, escalation, and disclosure. It also looks like board-level reporting with real metrics.

Additionally, “we used a third-party model” will not fully protect leadership. Regulators and courts often view outsourcing as delegating tasks, not delegating accountability. Therefore, vendor due diligence becomes part of board oversight.

The disclosure layer: where D&O claims often ignite

If the company told investors the AI was “safe” or “highly accurate” and a wave of errors then hits, the gap between promise and reality becomes litigation fuel. Allianz calls out AI washing themes in recent filings. (Allianz Commercial) The SEC’s enforcement posture reinforces the same message. (YouTube)

In 2026, the safest approach is not silence. It is precise language. It is verified controls. It is cautious claims.

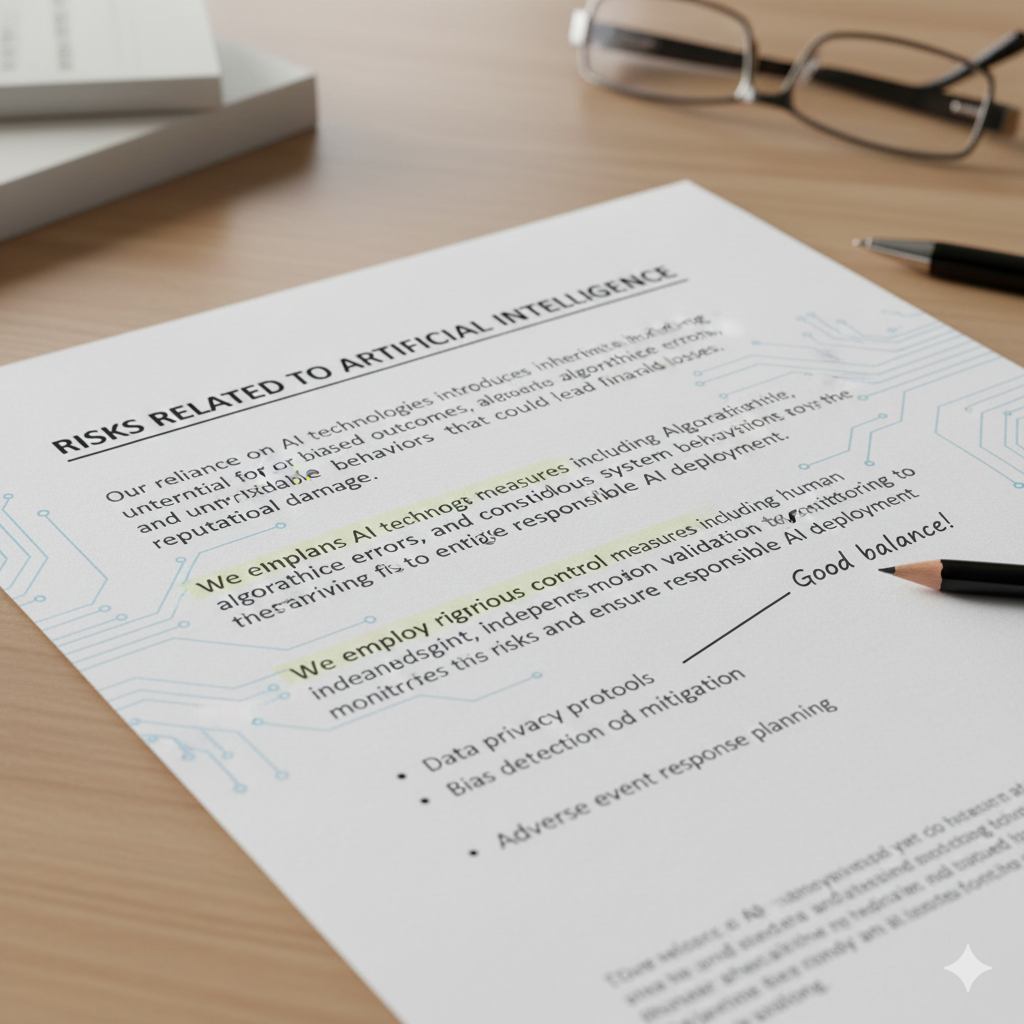

A practical 2026 governance blueprint for boards

A strong board does not try to be the AI engineer. It sets the rules of the road.

Start with a clear AI use map

The first step is to classify where AI speaks, decides, or recommends. A chatbot that drafts marketing copy is lower risk. A bot that suggests medication dosage is high risk. That simple classification is vital.

Then set a rule: higher risk AI requires stronger controls. That sounds obvious. Yet many firms skip it when speed matters.
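To make the use map concrete, here is a minimal Python sketch of tiered classification. It assumes a hypothetical `AIUseCase` record, a hypothetical list of high-stakes domains, and hypothetical per-tier controls. A real program would set these through its own risk appetite and framework rather than copy these values.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical: domains this sketch treats as high stakes.
HIGH_STAKES_DOMAINS = {"medical", "financial", "lending", "legal", "compliance"}

# Hypothetical minimum controls per tier; each higher tier carries every lower-tier control.
REQUIRED_CONTROLS = {
    RiskTier.LOW: ["output logging"],
    RiskTier.MEDIUM: ["output logging", "error monitoring"],
    RiskTier.HIGH: ["output logging", "error monitoring", "human review", "board reporting"],
}


@dataclass
class AIUseCase:
    name: str
    domain: str
    customer_facing: bool      # speaks directly to customers, patients, or investors
    acts_without_review: bool  # decides or recommends with no person in between


def classify(use_case: AIUseCase) -> RiskTier:
    """High-stakes domains are HIGH; other customer-facing or autonomous uses are MEDIUM."""
    if use_case.domain in HIGH_STAKES_DOMAINS:
        return RiskTier.HIGH
    if use_case.customer_facing or use_case.acts_without_review:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# A tiny AI use map built from the two examples in the text.
use_map = [
    AIUseCase("marketing copy drafts", "marketing", customer_facing=False, acts_without_review=False),
    AIUseCase("medication dosage suggestions", "medical", customer_facing=True, acts_without_review=True),
]
for uc in use_map:
    tier = classify(uc)
    print(f"{uc.name}: {tier.name} -> {', '.join(REQUIRED_CONTROLS[tier])}")
```

Running it prints one line per use case: the marketing drafter lands in LOW, and the dosage bot lands in HIGH with human review and board reporting attached. Keeping the controls as cumulative lists makes the rule “higher risk requires stronger controls” checkable rather than aspirational.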

Adopt an AI risk framework that regulators recognize

Frameworks are not magic. They are a common language. They also show seriousness.

The NIST AI Risk Management Framework (AI RMF 1.0) is widely referenced as voluntary guidance for managing AI risk. (RSI Security) In practice, adopting a recognized framework helps the board ask better questions, document decisions, and show diligence.

Additionally, AI management standards like ISO/IEC 42001 are emerging as a governance anchor for organizations building AI systems. (GSC Co.)

Build the “human in the loop” rule into policy

Hallucinations do the most damage when nobody checks outputs. So the board should push for a simple policy: if the AI can cause material harm, it needs human review, or it needs hard constraints.

Constraints can include retrieval from verified sources, refusal behavior, and calibrated confidence signals. They can also include “do not answer” triggers. Consequently, the product becomes safer, and the D&O story becomes stronger.
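The policy can be expressed as a release gate that every draft answer passes through. This is a minimal Python sketch under stated assumptions. The `gate_output` function, the topic list, and the 0.8 threshold are hypothetical. It also assumes the system already produces a calibrated confidence score and knows whether an answer was grounded in verified sources, which many deployments do not.

```python
from enum import Enum


class Action(Enum):
    RELEASE = "release"
    HUMAN_REVIEW = "human_review"
    REFUSE = "refuse"


# Hypothetical "do not answer" triggers; a real list would come out of legal and compliance review.
DO_NOT_ANSWER_TOPICS = {"medication dosage", "individual securities picks"}


def gate_output(topic: str, high_stakes: bool, grounded: bool, confidence: float,
                min_confidence: float = 0.8) -> Action:
    """Decide what happens to a draft AI answer before a user sees it.

    grounded: the answer was built from retrieved, verified sources.
    confidence: an assumed, already-calibrated score in [0, 1].
    """
    if topic in DO_NOT_ANSWER_TOPICS:
        return Action.REFUSE        # hard constraint: the bot declines regardless of confidence
    if not high_stakes:
        return Action.RELEASE       # low-harm output goes straight out
    if grounded and confidence >= min_confidence:
        return Action.RELEASE       # high stakes, but constrained by verified sources and confidence
    return Action.HUMAN_REVIEW      # high stakes and unconstrained: a person checks it first


print(gate_output("loan terms", high_stakes=True, grounded=False, confidence=0.95))
```

The ordering is deliberate. Hard “do not answer” triggers run first, so no confidence score can override them, and a high-stakes answer reaches a user unreviewed only when both constraints hold.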

Turn monitoring into a board level dashboard

Monitoring should not be a vague promise. It should be measured.

In 2026, strong firms will track: error rates, complaint rates, escalation counts, “unsafe answer” counts, and model drift signals. They will also track vendor changes and system updates.

Furthermore, they will keep logs that preserve evidence. That helps fix issues. It also helps defend claims.
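A dashboard starts as a small aggregation over interaction logs. The sketch below is illustrative Python that assumes a hypothetical `InteractionLog` schema in which each record already carries error, complaint, escalation, and unsafe-answer flags. It does not implement real drift detection. It only counts the model versions seen in the period as a crude signal that a vendor or system update happened. The 2% alert threshold is an arbitrary placeholder.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class InteractionLog:
    """One logged AI interaction (hypothetical schema)."""
    model_version: str
    flagged_error: bool  # output later found to be wrong
    complaint: bool      # user complained about this answer
    escalated: bool      # handed to a human or to the incident process
    unsafe: bool         # tripped an "unsafe answer" check


def dashboard(logs: list[InteractionLog], error_rate_alert: float = 0.02) -> dict:
    """Summarize one reporting period into board-level metrics."""
    n = len(logs)
    if n == 0:
        return {"interactions": 0}
    error_rate = sum(log.flagged_error for log in logs) / n
    versions = Counter(log.model_version for log in logs)
    return {
        "interactions": n,
        "error_rate": error_rate,
        "complaint_rate": sum(log.complaint for log in logs) / n,
        "escalations": sum(log.escalated for log in logs),
        "unsafe_answers": sum(log.unsafe for log in logs),
        "model_versions": dict(versions),  # more than one version: the system changed mid-period
        "alert": error_rate > error_rate_alert or len(versions) > 1,
    }


# Illustrative data only.
period = [
    InteractionLog("vendor-model-a", flagged_error=False, complaint=False, escalated=False, unsafe=False),
    InteractionLog("vendor-model-a", flagged_error=True, complaint=True, escalated=True, unsafe=False),
]
print(dashboard(period))
```

Because the summary is computed from the same logs that preserve evidence, the numbers the board sees can later be traced back to individual interactions.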

Buying and negotiating D&O in 2026: how to avoid painful surprises

Insurance buyers often focus on limit and premium. In 2026, wording will matter more.

The questions that unlock real clarity

Ask whether the carrier has an AI exclusion, an AI endorsement, or AI-related sublimits. Ask how “professional services” is defined. Ask how “bodily injury” is treated. Ask how “technology services” is treated.

Additionally, push for clarity on investigations coverage. If regulators investigate AI-related conduct, the company needs to know whether defense costs are covered and under what triggers.

WTW emphasizes that unclear language should be clarified early and that brokers may look for alternative solutions as AI exclusions appear. (WTW) That advice is practical and urgent.

Why the story you tell underwriters now matters

Underwriters price fear. They discount control.

If you can show a credible AI governance program, you may protect pricing and reduce exclusion pressure. If you cannot, the carrier may respond with higher retention, narrower wording, or both.

Consequently, governance is not only a risk control. It is also a negotiating asset.

The “tower strategy” for AI risk

Some companies will end up with D&O carriers pushing AI out, while E&O and cyber carriers also tighten. That can leave gaps.

In 2026, the best practice is to design the program as one system. D&O, E&O, cyber, and product liability should be coordinated. Coverage counsel can help. Specialist brokers can help too.

Scenario walkthrough: an AI financial bot goes wrong

Imagine a fintech app adds an AI assistant. It suggests investment allocations. A user complains the advice was harmful. Social media amplifies it. Regulators ask questions. Then a short seller publishes a report alleging the company overstated AI safety.

What happens next is predictable.

First, the company faces customer claims. Next, investors allege misrepresentation. Then directors are accused of weak oversight. After that, insurers examine policy wording and notice dates.

This is where preparation becomes powerful.

If the board has documented oversight, clear limitations, and measured monitoring, the narrative is “responsible innovation.” If the board has vague minutes and hype language, the narrative is “reckless rollout.”

Allianz’s D&O report stresses governance tools and expertise as critical for managing AI risks and communicating them to stakeholders. (Allianz Commercial) That is exactly what this scenario demands.

2026 predictions: what will likely change next year

Boards want forecasts, not panic. So here is the balanced, forward-looking picture.

Prediction 1: AI specific exclusions spread, then fragment

Some carriers will try broad exclusions. Others will offer narrower endorsements. A few will differentiate with “affirmative coverage” tied to governance standards.

Market commentary already points to insurers not wanting to cover AI errors and to standardized forms aimed at excluding certain AI-caused injuries in liability contexts. (Barron’s)

Consequently, 2026 will reward buyers who negotiate early and document controls.

Prediction 2: D&O underwriting becomes more technical

Underwriters will ask for model governance artifacts. They will want incident response playbooks. They will want training and oversight proof.

Additionally, they will pressure marketing teams to tone down claims. That will feel annoying. It will also be protective.

Prediction 3: Regulatory enforcement becomes a bigger trigger than private suits

Private litigation will keep growing. Yet enforcement can be faster and more public.

The Commission’s powers to enforce the EU AI Act’s obligations for providers of general-purpose AI (GPAI) models are scheduled to apply from August 2, 2026. (Digital Strategy) That date will sharpen internal urgency for many firms.

In the U.S., the SEC and FTC have already shown willingness to act on misleading AI claims and AI-related deception. (YouTube) Those signals will shape board agendas in 2026.

Prediction 4: “AI governance” becomes a disclosure norm

In 2026, investors will ask: what controls do you have? What is the incident rate? How do you test? Do you use third-party models?

Companies that answer with confidence and evidence will look trustworthy. Companies that answer with slogans will look fragile.

Furthermore, stronger disclosure may soften the stock drop when incidents happen. That can reduce litigation severity.

How to prepare now: the board’s 2026 action plan

Preparation does not require perfection. It requires discipline.

Start by mapping AI use cases and classifying risk. Then adopt a recognized risk framework. Next, require monitoring metrics and regular reporting. After that, align insurance towers and policy wording with the real risk map.

Additionally, stress test the “worst day” scenario. Ask: what if the bot gives wrong medical or financial guidance? Who shuts it down? Who reports it? Who informs customers? Who informs regulators? Who informs investors?

Those questions are not theoretical. They are vital.

Conclusion: the D&O story that wins in 2026

The winning posture in 2026 is not anti-AI. It is pro-control.

Boards that treat hallucinations as a known limitation will build safety rails. They will document oversight. They will communicate accurately. They will negotiate insurance with eyes open.

However, boards that treat AI as magic will face ugly surprises. They may face exclusions. They may face coverage fights. They may face credible claims that the risk was foreseeable.

D&O insurance can still be a powerful shield. Yet in 2026, it will favor disciplined governance. It will favor verified language. It will favor leaders who can prove they did not just chase the next big thing. They managed it.

Sources and References

- Allianz Commercial: D&O insurance insights 2026 (PDF)

- European Commission: Guidelines for providers of GPAI models (AI Act timeline)

- U.S. SEC: Enforcement actions tied to “AI washing”

- U.S. FTC: Operation AI Comply announcement

- WTW: “Insuring the AI age” (AI exclusions and endorsements)

- D&O Diary: The AI revolution and D&O insurance implications

- Barron’s: Insurers don’t want to cover AI’s errors

- NIST AI RMF guidance overview

- ISO/IEC 42001:2023 AI management system standard (PDF scan)